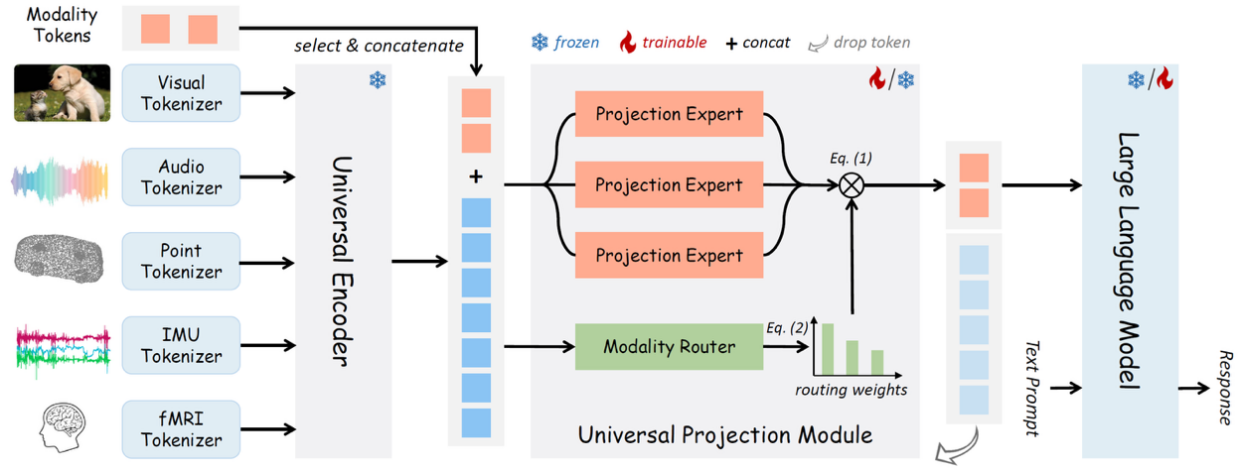

Reasoning across Multiple Data Modalities

Building on last year's outcomes, the primary goal of this project in the coming year is to give multi-modal large language models the ability to reason across data modalities. First, we will design reinforcement learning algorithms that improve reasoning within each individual modality. Second, we will build a unified model that supports multi-modal, multi-task learning, trained on both paired and unpaired data across modalities and tasks. Finally, we will incorporate these reinforcement learning algorithms into the unified large language model to further improve its reasoning capabilities across modalities and tasks.